19 KiB

- Discrete Math Notes [2024-03-30 Sat 22:51]

Discrete Math Notes [2024-03-30 Sat 22:51] journal cs2233 college discrete_math

Laws of Propositional Logic

Domination Laws

- $p ∧ F ≡ F$

- $p ∨ ≡ T$

Double Negation Law

- $¬¬p ≡ p$

Complement Laws

- $p ∧ ¬p ≡ F$

- $¬T ≡ F$

- $p ∨ ¬p ≡ T$

- $¬F ≡ T$

De Morgan's Laws

- $¬(p ∨ q) ≡ ¬p ∧ ¬q$

- $¬(p ∧ q) ≡ ¬p ∨ ¬q$

Absorption Laws

- $p ∨ (p ∧ q) ≡ p$

- $p ∧ (p ∨ q) ≡ q$

Idempotent Laws

- $p ∨ p ≡ p$

- $p ∧ p ≡ p$

Associative Laws

Modifying the location of grouping doesn't modify the output among like operators.

- $(p ∨ q) ∨ r ≡ p ∨ (q ∨ r)$

- $(p ∧ q) ∧ r ≡ p ∧ (q ∧ r)$

Commutative Laws

Modifying the location of variables doesn't modify the output around like operators.

- $(p ∨ q) ≡ (q ∨ p)$

- $(p ∧ q) ≡ (q ∧ p)$

Distributive Laws

- $p ∨ (q ∧ r) ≡ (p ∨ q) ∧ (p ∨ r)$

- $p ∧ (q ∨ r) ≡ (p ∧ q) ∨ (p ∧ r)$

Identity Laws

- $p ∨ F ≡ p$

- $p ∧ T ≡ T$

Conditional Identities

- $p → q ≡ ¬p ∨ q$

- $p ↔ q ≡ (p → q) ∧ (q → p)$

Even and Odd Integers

- An integer $x$ is even if there is an integer $k$ such that $x = 2k$

- An integer $x$ is odd if there is an integer $k$ such that $x = 2k + 1$

Parity

- The parity of a number is whether the number is odd or even. If two numbers are both even or both odd, then the two numbers have the same parity. If one number is odd and the other is even is even, then the two numbers have opposite parity.

Rational Numbers

- A number $r$ is rational if there exist integers $x$ and $y$ such that $y ≠ 0$ and $r = x/y$.

Divides

- An integer $x$ divides an integer $y$ if and only if $x ≠ 0$ and $y = kx$, for some integer $k$. The fact that $x$ divides $y$ is denoted $x | y$. If $x$ does not divide $y$, then the fact is denoted $x ∤ y$.

Prime and Composite Numbers

- An integer $n$ is prime if and only if $n > 1$, and the only positive integers that divide $n$ are $1$ and $n$.

- An integer $n$ is composite if and only if $n > 1$, and there is an integer $m$ such that $1 < m < n$ and $m$ divides $n$.

Consecutive Integers

- Two integers are consecutive if one of the numbers is equal to $1$ plus the other number. Effectively $n = m + 1$.

Proof by Contrapositive

- A proof by contrapositive proves a conditional theorem of the form $p → c$ by showing that the contrapositive $¬c → ¬p$ is true. In other words, $¬c$ is assumed to be true and $¬p$ is proven as a result of $¬c$.

Sets

Common Mathematical Sets

| Set | Symbol | Examples of Elements |

|---|---|---|

| Natural Numbers | ℕ | 0,1,2,3,… |

| Integers | ℤ | …,-2,-1,0,1,2,… |

| Rational Numbers | ℚ | 0, 1/2, 5.23,-5/3, 7 |

| Irrational Numbers | ℙ | π, √2, √5/3, -4/√6 |

| Real Numbers | ℝ | 0, 1/2, 5.23, -5/3, π, √2 |

Subset

- If every element in $A$ is also an element of $B$, then $A$ is a subset of $B$, denoted as $A ⊆ B$.

- If there is an element of $A$ that is not an element of $B$, then $A$ is not a subset of $B$, denoted as $A ⊈ B$.

- If the universal set is $U$, then for every set $A$: $Ø ⊆ A ⊆ U$

- If $A ⊆ B$ and there is an element of $B$ that is not an element of $A$ (i.e. $A ≠ B$), then $A$ is a proper subset of $B$, denoted as $A ⊂ B$.

Power Set

- The power set of a set $A$, denoted $P(A)$, is the set of all subsets of $A$. For example if $A = {1,2,3}$, then: $P(A) = {Ø,{1},{2},{3},{1,2},{1,3},{2,3},{1,2,3}}$

Cardinality of a Power Set

- Let $A$ be a finite set of cardinality $n$. Then the cardinality of the power set of $A$ is $2^n$, or $|P(A)| = 2^n$

Set Operations

Set Intersection

- Let $A$ and $B$ be sets. The $intersection$ of $A$ and $B$, denoted $A ∩ B$ and read "$A$ intersect $B$", is the set of all elements that are elements of both $A$ and $B$.

Set Union

- The union of two sets, $A$ and $B$, denoted $A ∪ B$ and read "$A$ union $B$", is the set of all elements that are elements of $A$ or $B$. The definition for union uses the inclusive or, meaning that if an element is an element of both $A$ and $B$, then it also and element of $A ∪ B$.

Set Difference

- The difference between two sets $A$ and $B$, denoted $A - B$, is the set of elements that are in $A$ but not in $B$.

- The difference operation is not commutative since it is not necessarily the case that $A - B = B - A$. Consider that $3 - 2 ≠ 2 - 3$, same applies for the set difference operator.

Set Symmetric Difference

- The symmetric difference between two sets, $A$ and $B$, denoted $A ⊕ B$, is the set of elements that are a member of exactly one of $A$ and $B$, but not both. An alternate definition of the symmetric difference operation is: $A ⊕ B = (A - B) ∪ (B - A)$

Set Complement

- The complement of a set $A$, denoted $ ̅A$, is the set of all elements in $U$ (the universal set) that are not elements of $A$. An alternate definition of $ ̅A$ is $U - A$.

Summary of Set Operations

| Operation | Notation | Description |

|---|---|---|

| Intersection | $A ∩ B$ | ${x: x ∈ A and x ∈ B}$ |

| Union | $A ∪ B$ | ${x: x ∈ A and x ∈ B or both}$ |

| Difference | $A - B$ | ${x: x ∈ A and x ∉ B}$ |

| Symmetric Difference | $A ⊕ B$ | ${x: x ∈ A - B or x ∈ B - A}$ |

| Complement | $ ̅A$ | ${x: x ∉ A}$ |

Set Identities

- A set identity is an equation involving sets that is true regardless of the contents of the sets in the expression.

- Set identities are similar to Laws of Propositional Logic

-

The sets $U$ and $Ø$ correspond to the constants true (T) and false (F):

- $A ∈ Ø ↔ F$

- $A ∈ U ↔ T$

Cartesian Products

- An ordered pair of items is written $(x,y)$

- The first entry of the ordered pair $(x,y)$ is $x$ and the second entry is $y$

- The use of parentheses $()$ for an ordered pair indicates that the order of the entries is significant, unlike sets which use curly braces ${}$

- Two ordered pairs $(x,y)$ and $(u,w)$ are equal if and only if $x = u$ and $y = w$

- For two sets, $A$ and $B$, the Cartesian product of $A$ and $B$ denoted $A × B$, is the set of all ordered pairs in which the first entry is in $A$ and the second entry is in $B$. That is: $A × B = {(a,b): a ∈ A and b ∈ B}$

- Since the order of the elements in a pair is significant, $A × B$ will not be the same as $B × A$, unless $A = B$, or either $A$ or $B$ is empty. If $A$ and $B$ are finite sets then $|A × B| = |A| × |B|$

Strings

- A sequence of characters is called a string

- The set of characters used in a set of strings is called the alphabet for the set of strings

- The length of a string is the number of characters in the string

-

A binary string is a string whose alphabet is {0,1}

- A bit is a character in a binary string

- A binary string of length $n$ is also called an $n$-bit string

- The set of binary strings of length $n$ is denoted as ${0,1}^n$

- The empty string is the unique string whose length is 0 and is usually denoted by the symbol $λ$. Since ${0,1}^0$ is the set of all binary strings of length 0, ${0,1}^0 = {λ}$

-

If $s$ and $t$ are two strings, then the concatenation of $s$ and $t$ (denoted $st$) is the string obtained by putting $s$ and $t$ together

- Concatenating any string $x$ with the empty string ($λ$) gives back: $x: xλ = x$

Functions

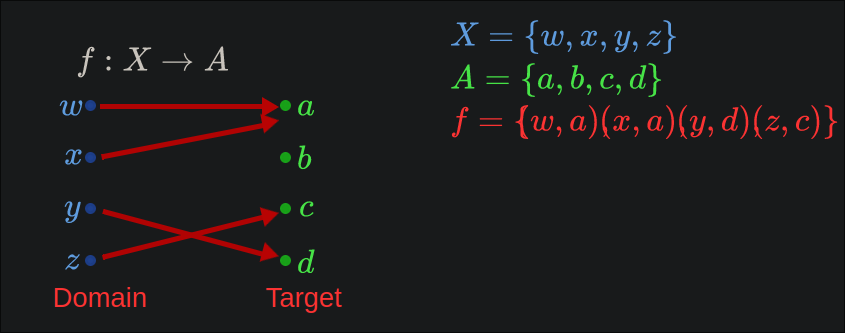

Definition of a function:

A function $f$ that maps elements of a set $X$ to elements of a set $Y$, is a subset of $X × Y$

such that for every $x ∈ X$, there is exactly one $y ∈ Y$ for which $(x,y) ∈ f$.

$f: X → Y$ is the notation to express the fact that $f$ is a function from $X$ to $Y$. The set

$X$ is called the _*domain*_ of $f$, and the set $Y$ is the _*target*_ of $f$. An alternate word

for target that is sometimes used is _*co-domain*_. The fact that $f$ maps $x$ to $y$

(or $(x,y) ∈ f$) can also be denoted as $f(x) = y$- If $f$ maps an element of the domain to zero elements or more than one element of the target, then $f$ is not well-defined.

- If $f$ is a function mapping $X$ to $Y$ and $X$ is a finite set, then the function $f$ can be specified by listing the pairs $(x,y)$ in $f$. Alternatively, a function with a finite domain can be expressed graphically as an arrow diagram.

-

In an arrow diagram for a function $f$, the elements of the domain $X$ are listed on the left and the elements of the target $Y$ are listed on the right. There is an arrow diagram from $x ∈ X$ to $y ∈ Y$ if and only if $(x,y) ∈ f$. Since $f$ is a function, each $x ∈ X$ has exactly one $y ∈ Y$ such that $(x,y) ∈ f$, which means that in the arrow diagram for a function, there is exactly one arrow pointing out of every element in the domain.

- See the following example arrow diagram:

- See the following example arrow diagram:

-

For function $f: X → Y$, an element $y$ is in the range of $f$ if and only if there is an $x ∈ X$ such that $(x,y) ∈ f$.

- Expressed in set notation: Range of $f = {y: (x,y) ∈ f, for some x ∈ X}$

Function Equality

- Two functions, $f$ and $g$, are equal if $f$ and $g$ have the same domain and target, and $f(x) = g(x)$ for every element $x$ in the domain. The notation $f = g$ is used to denote the fact that functions $f$ and $g$ are equal.

The Floor Function

- The floor function maps a real number to the nearest integer in the downwards direction

- The floor function is denoted as: $floor(x) = ⌊x⌋$

The Ceiling Function

- The ceiling function rounds a real number to the nearest integer in the upwards direction

- The ceiling function is denoted as: $ceiling(x) = ⌈x⌉$

Properties of Functions

- A function $f: X → Y$ is one-to-one or injective if $x_1 ≠ x_2$ implies that $f(x_1) ≠ f(x_2)$. That is, $f$ maps different elements in $X$ to different elements in $Y$.

- A function $f: X → Y$ is onto or surjective if the range of $f$ is equal to the target $Y$. That is, for every $y ∈ Y$, there is an $x ∈ X$ such that $f(x) = y$.

- A function is bijective if it is both one-to-one and onto. A bijective function is called a bijection. A bijection is also called a one-to-one correspondence.

Composition of Functions

- The process of applying a function to the result of another function is called composition.

-

Definition of function composition

$f$ and $g$ are two function, where $f: X → Y$ and $g: Y → Z$. The composition of $g$ with $f$, denoted $g ○ f$, is the function ($g ○ f): X → Z$, such that for all $x ∈ X, (g ○ f)(x) = g(f(x))$ - Composition is associative

-

The identity function always maps a set onto itself and maps every element onto itself.

- The identity function on $A$, denoted $I_A: A → A$, is defined as $I_A(a) = a$, for all $a ∈ A$

-

If a function $f$ from $A$ to $B$ has an inverse, then $f$ composed with its inverse is the indentity function. If $f(a) = b$, then $f^-1(b) = a$, and $(f^-1 ○ f)(a) = f^-1(f(a)) = f^-1(b) = a$.

- Let $f: A → B$ be a bijection. Then $f^-1 ○ f = I_A$ and $f ○ f^-1 = I_B$

Computation

Introduction to Algorithms

- An algorithm is a step-by-step method for solving a problem. A description of an algorithm specifies the input to the problem, the output to the problem, and the sequence of steps to be followed to obtain the output from the input.

-

A description of an algorithm usually includes

- A name for the algorithm

- A brief description of the task performed by the algorithm

- A description of the input

- A description of the output

- A sequence of steps to follow

- Algorithms are often described in pseudocode, which is a language between written English and a computer language

- An important type of step in an algorithm is an assignment, in which a variable is given a variable. The assignment operated is denoted by $:=$, for example to assign $x$ to $y$ you would write it as: $x := y$.

-

The output of an algorithm is specified by a return statement.

- For example, the statement $Return(x)$ would return the value of $x$ as the output of the algorithm.

- A return statement in the middle of an algorithm causes the execution of the algorithm to stop at that point and return the indicated value

The if-statement and the if-else-statement

- An if-statement tests a condition, and executes one or more instructions if the condition evaluates to true. In a single line if-statement, a conditon and instruction appear on the same line.

- An if-else-statement tests a condition, executes one or more instructions if the condition evaluates to true, and executes a different set of instructions in the condition evaluates to false.

The for-loop

- In a for-loop, a block of instructions is executed a fixed number of times as specified in the first line of the for-loop, which defines an index, a starting value for the index, and a final value for the index.

- Each repetition of the block of instructions inside the for-loop is called an iteration.

- The index is initially assigned a value equal to the starting value, and is incremented just before the next iteration begins.

- The final iteration of the for-loop happens when the index reaches the final value.

The while-loop

- A while-loop iterates an unknown number of times, ending when a certain condition becomes false.

Asymptotic Growth of Functions

- The asymptotic growth of the function $f$ is a measure of how fast the output $f(n)$ grows as the input $n$ grows.

- Classification of functions using Oh, $Ω$, and $Θ$ notation (called asymptotic notation) provides a way to concisely characterize the asymptotic growth of a function.

O-notation

- The notation $f = O(g)$ is read "$f$ is Oh of $g$". $f = O(g)$ essentially means that the function $f(n)$ is less than or equal to $g(n)$, if constant factors are omitted and small values for $n$ are ignored.

- The $O$ notation serves as a rough upper bound for functions (disregarding constants and small input values)

- In the expressions $7n^3$ and $5n^2$, the $7$ and the $5$ are called constant factors because the values of $7$ and $5$ do not depend on the variable $n$.

- If $f$ is $O(g)$, then there is a positive number $c$ such that when $f(n)$ and $c × g(n)$ are graphed, the graph of $c × g(n)$ will eventually cross $f(n)$ and remain higher than $f(n)$ as $n$ gets large.

-

Definition: Oh notation

Let $f$ and $g$ be functions from $ℤ^+$ to $ℝ^>=$. Then $f = O(g)$ if there are positive real numbers $c$ and $n_0$ such that for any $n ∈ ℤ^+$ such that $n >= n_0$: $f(n) <= c × g(n)$. - The constants $c$ and $n_0$ in the definition of Oh-notation are said to be a witness to the fact that $f = O(g)$.

Ω Notation

- The $Ω$ notation provides a lower bound on the growth of a function

-

$Ω$ notation definition:

Let $f$ and $g$ be functions from $ℤ^+$ to $ℝ^>=$. Then $f = Ω(g)$ if there are positive real numbers $c$ and $n_0$ such that for any $n ∈ ℤ^+$ such that for $n >= n_0$, $f(n) >= c × g(n)$ The notation $f = Ω(g)$ is read "$f$ is _*Omega*_ of $g$". -

Relationship between Oh-notation and $Ω$-notation:

Let $f$ and $g$ be functions from $ℤ^+$ to $ℝ^>=$. Then $f = Ω(g)$ if and only if $g = O(f)$

𝛩 notation and polynomials

- The $𝛩$ notation indicates that two functions have the same rate of growth

-

$𝛩$-notation definition:

Let $f$ and $g$ be functions from $ℤ^+$ to $ℝ^>=$. $f = 𝛩(g)$ if $f = O(g)$ and $f = Ω(g)$ The notation $f = 𝛩(g)$ is read "$f$ is *_Theta of_* $g(n)$" - If $f = 𝛩(g)$, then $f$ is said to be order of $g$.

- Lower order terms are those that can be removed from $f$ that does not change the order of $f$.

Asymptotic growth of polynomials

Let $p(n)$ be a degree-$k$ polynomial of the form:

$p(n) = a_kn^k + ... + a_1n + a_0$

in which $a_k > 0$. Then $p(n)$ is $𝛩(n^k)$Asymptotic growth of logarithm functions with different bases

Let $a$ and $b$ be two real numbers greater than $1$, then $log_a(n) = 𝛩(log_b(n))$

Growth rate of common functions in analysis of algorithms

- A function that does not depend on $n$ at all is called a constant function. $f(n) = 17$ is an example of a constant function.

- Any constant function is $𝛩(1)$.

- If a function $f(n)$ is $𝛩(n)$, we say that $f(n)$ is a

linearfunction. - A function $f(n)$ is said to be polynomial if $f(n)$ is $𝛩(n^k)$ for some positive real number $k$.

Common functions in algorithmic complexity.

The following table of functions are given in increasing order of complexity, with the $m$ in the third from the bottom being any $m > 3$: Table here

Rules about asymptotic growth

Analysis of algorithms

- The amount of resources used by an algorithm is referred to as the algorithm's computational complexity.

- The primary resources to optimize are the time the algorithm requires to run (time complexity) and the amount of memory used (space complexity).

- The time complexity of an algorithm is defined by a function $f: ℤ^+ → ℤ^+$ such that $f(n)$ is the maximum number of atomic operations performed by the algorithm on any input of size $n$. $ℤ^+$ is the set of positive integers.

- The asymptotic time complexity of an algorithm is the rate of asymptotic growth of the algorithm's time complexity function $f(n)$.

Worst-case complexity

- The worst-case analysis of an algorithm evaluates the time complexity of the algorithm on the input of a particular size that takes the longest time.

- The worst-case time complexity function $f(n)$ is defined to be the maximum number of atomic operations the algorithm requires, where the maximum is taken over all inputs of size $n$.

- When proving an upper bound on the worst-case complexity of an algorithm (using $O$-notation), the upper bound must apply for every input of size $n$.

- When proving a lower bound for the worst-case complexity of an algorithm (using $Ω$-notation), the lower bound need only apply for at least one possible input of size $n$.

- Average-case analysis evaluates the time complexity of an algorithm by determining the average running time of the algorithm on a random input.